Authors: Peter Abrell (Vision Electric Super Conductors), Karl Rabe (Wooden Data Center)

Over the last 20 years, no other industry sector has gained as much growth and value as technology. Companies like Google, Apple and Amazon have become global players and are among the most highly valued companies worldwide. With the help of technological and communication advancements, our lives have become increasingly interconnected, and more of this interconnection is taking place online.

Data centers are at the heart of modern commerce. In essence, they are structures that use large amounts of power to store, relay and transmit large amounts of information across the world. Located in regions with access to sufficient power, data centers need high reliability to ensure 24/7 uptime. Currently, 1.3 GW of IT power is installed in Germany, and an additional 2 GW will be installed by 2029 [1]. According to the study by the German Data Center Association, this increase equates to an investment of around 24 billion EUR over the next 5 years.

The power per rack has increased steadily over the years. With the surge of AI technology, it is set to increase further: power ratings of 200 kW per rack are envisioned in the near future. A thermal load of this magnitude cannot be handled by conventional fan-cooled servers, so data center builders are switching to liquid cooling. The new energy efficiency law in Germany is additionally pushing data center operators to become more efficient. A PUE (power usage effectiveness) of 1.2 and a heat reuse of 20% will become mandatory for new data centers by 2030.

Meeting the efficiency goals with conventional AC technology is a challenge for data center operators. To increase efficiency with conventional (copper and aluminum) conductors, the voltage is raised to reduce the operating current and the resulting heat generation. Concepts are emerging to use medium-voltage (MV) cables inside the data center and to move the uninterruptible power supply (UPS) to MV as well. This will increase the size and cost of the data center and the connected converters.

Reducing the cooling power and letting the hardware operate at higher temperatures is another way to decrease PUE. This trades higher efficiency in the short term for a reduced lifetime of the IT hardware, which is not a sustainable solution. Liquid cooling, either immersion or direct-to-chip, is another alternative, as it is more efficient than fan cooling and offers higher-grade heat for heat reuse.

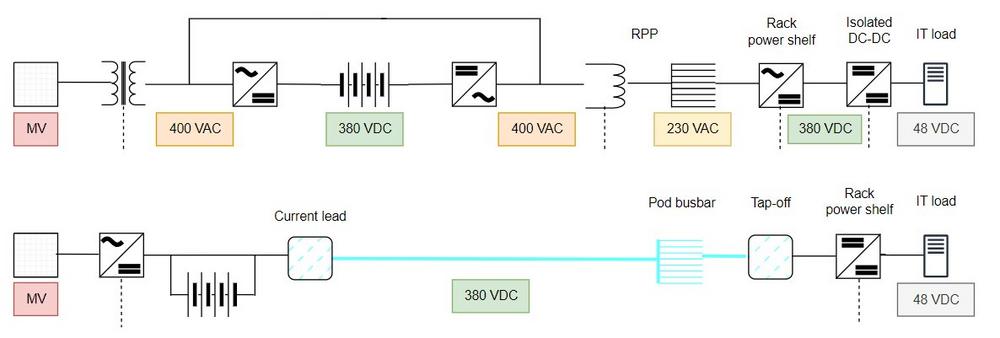

Current data centers have a power density of around 10-20 kW/m², or 30-40 kW/m² for high performance computing (HPC) data centers. They traditionally use an AC power distribution system, while back-up batteries and servers operate on direct current (DC). This mismatch results in multiple voltage levels, several AC/DC conversions and additional heat that needs to be dissipated. The MV is supplied by the utility grid, then transformed down and distributed to the power distribution units (PDUs). From there, the power is routed to the individual remote power panels (RPPs) and finally rectified to DC in the racks' power shelves. From the MV connection onward, the power goes through three transformations, and each step incurs a non-negligible power loss.

However, there is a way to eliminate the transformation losses and decrease the electric losses at the same time: by using DC distribution voltage and superconductors. A DC power distribution system will make conventional, compulsory infrastructure obsolete and reduce the amount of cooling power required, which will increase efficiency and reduce material costs.

The solution: EOS Data center

Superconductors have no DC resistance and carry extremely high current densities, which means the distribution voltage can be set at the voltage of the UPS (380 V). Since the IT hardware runs on DC anyway, a single DC-DC power shelf supplies the rack with power. The differences between the two infrastructures can be seen in Figure 1.

Cooling is provided by a flow of liquid nitrogen (LN2) that is pumped through the distribution line to the tap-offs and back to the cooling machines. Assuming the racks are liquid cooled, heat reuse can be implemented that uses the heat from the IT hardware as well as the heat from the cooling machines, which raises the overall efficiency further. The reduction in components also increases the reliability of the system, as fewer components translate to fewer failures.

All these advantages are brought together in the EOS data center. The concept consists of pods that each house 32 racks rated at 120 kW, 16 per aisle. Two superconducting distribution systems feed a total of 14 pods, resulting in a total power of 55 MW. Each superconducting distribution system is designed to supply the whole data center with power, which establishes power redundancy. Inside the pods, a tap-off box connects directly to the racks. The 380 VDC distribution voltage is stepped down by a DC-DC power shelf.
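The pod figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the rack count, rack power, pod count and 380 V level come from the text, while the function names and the assumption that the full rack power is drawn at exactly 380 V are ours.

```python
# Figures taken from the concept description above.
RACKS_PER_POD = 32
RACK_POWER_KW = 120.0
POD_COUNT = 14
DISTRIBUTION_VOLTAGE_V = 380.0


def pod_power_kw() -> float:
    """IT power of one pod in kW."""
    return RACKS_PER_POD * RACK_POWER_KW


def site_power_kw() -> float:
    """IT power of all pods in kW."""
    return POD_COUNT * pod_power_kw()


def dc_current_a(power_kw: float, voltage_v: float = DISTRIBUTION_VOLTAGE_V) -> float:
    """DC current in A needed to deliver power_kw at voltage_v (I = P / U)."""
    return power_kw * 1000.0 / voltage_v


if __name__ == "__main__":
    print(f"Pod power:    {pod_power_kw():,.0f} kW")
    print(f"Site power:   {site_power_kw() / 1000.0:.2f} MW")
    print(f"Rack current: {dc_current_a(RACK_POWER_KW):,.0f} A")
    print(f"Pod current:  {dc_current_a(pod_power_kw()):,.0f} A")
    print(f"Site current: {dc_current_a(site_power_kw()) / 1000.0:.1f} kA")
```

With these inputs, one pod comes to 3,840 kW and the 14 pods to 53.76 MW, close to the roughly 55 MW quoted above. At 380 V this corresponds to about 10 kA per pod and about 141 kA for the whole site, which illustrates why a low-voltage distribution at this power level depends on very high current densities.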

EOS combines this electrical infrastructure with the sustainable building materials of the Wooden Data Center and a liquid cooling system that uses state-of-the-art adiabatic coolers. Heat reuse will be in effect, and the heat output of the cooling machines will be integrated. This increases sustainability across all scopes.

Comparison to conventional AC system

The systems can be compared using rough order-of-magnitude estimates. First, the AC system includes 5% conversion losses [2] due to the AC-DC conversion in the UPS and power shelf converters. Two different modules are defined: the distribution inside the pod and the distribution to the pods.

The CAPEX of the AC system consists of the copper conductor costs. The size and weight of the copper are calculated from the distribution length, assuming a current density of 1.2 A/mm². This yields not only the total weight but also the electrical resistance, which is used to estimate the electric losses. The AC distribution voltage is 400 VAC to the pods and 230 VAC inside the pods. A price of 15 EUR/kg is assumed for the copper conductors.
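The copper estimate described above can be sketched as follows for a single conductor. The current density and copper price come from the text. The copper density and resistivity are standard room-temperature values that we assume here, and the function name and the example current and length are hypothetical. A real AC feeder has several phase conductors, so the per-conductor results would need to be multiplied accordingly.

```python
from typing import NamedTuple

CURRENT_DENSITY_A_PER_MM2 = 1.2      # from the text
COPPER_PRICE_EUR_PER_KG = 15.0       # from the text
COPPER_DENSITY_KG_PER_M3 = 8960.0    # assumption: standard value for copper
COPPER_RESISTIVITY_OHM_M = 1.72e-8   # assumption: copper at about 20 °C


class CopperEstimate(NamedTuple):
    area_mm2: float
    mass_kg: float
    cost_eur: float
    resistance_ohm: float
    loss_w: float


def copper_conductor(current_a: float, length_m: float) -> CopperEstimate:
    """Size one copper conductor for a given current and length."""
    # Cross-section follows from the assumed current density: A = I / J.
    area_mm2 = current_a / CURRENT_DENSITY_A_PER_MM2
    area_m2 = area_mm2 * 1e-6
    # Weight and cost follow from the conductor volume.
    mass_kg = COPPER_DENSITY_KG_PER_M3 * area_m2 * length_m
    cost_eur = mass_kg * COPPER_PRICE_EUR_PER_KG
    # Resistance R = rho * L / A, ohmic loss P = I^2 * R.
    resistance_ohm = COPPER_RESISTIVITY_OHM_M * length_m / area_m2
    loss_w = current_a ** 2 * resistance_ohm
    return CopperEstimate(area_mm2, mass_kg, cost_eur, resistance_ohm, loss_w)


if __name__ == "__main__":
    # Hypothetical example: 1 kA over 100 m (not a figure from the article).
    print(copper_conductor(current_a=1000.0, length_m=100.0))
```

Because the cross-section scales with the current, the ohmic loss at a fixed current density grows linearly with both current and length. For the hypothetical 1 kA, 100 m conductor this gives roughly 750 kg of copper and about 2 kW of loss.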

The superconducting system consists of four main parts: the HTS (high-temperature superconductor) conductors, the cryostat, the current leads/tap-offs and a cooling station. The heat leak of the superconducting distribution system is assumed to be 2 W/m, with the current leads and tap-offs needing 50 W/kA of cooling power. These cooling demands are added together and divided by the cooling efficiency of 7% to estimate the electrical power required at room temperature. A current lead is estimated at 105,000 EUR and a tap-off at 10,000 EUR. Cryostat costs are estimated at 500 EUR/m and HTS costs at 150 EUR/kAm.
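A minimal sketch of this estimate is given below. The unit values (2 W/m, 50 W/kA, 7%, and the component prices) are taken from the text. The function names, the interpretation that the 50 W/kA applies to the rated current of each current lead and tap-off, and the example line are our assumptions. The cost of the cooling station is not stated in the text and is therefore left out.

```python
from typing import Sequence

HEAT_LEAK_W_PER_M = 2.0            # from the text
TERMINATION_LOAD_W_PER_KA = 50.0   # from the text, for current leads and tap-offs
COOLING_EFFICIENCY = 0.07          # from the text
CURRENT_LEAD_EUR = 105_000.0       # from the text
TAP_OFF_EUR = 10_000.0             # from the text
CRYOSTAT_EUR_PER_M = 500.0         # from the text
HTS_EUR_PER_KA_M = 150.0           # from the text


def cooling_power_w(length_m: float, termination_currents_ka: Sequence[float]) -> float:
    """Electrical power at room temperature needed to cool the line."""
    # Assumption: each current lead and tap-off contributes 50 W per kA
    # of its own rated current.
    cold_load_w = (HEAT_LEAK_W_PER_M * length_m
                   + TERMINATION_LOAD_W_PER_KA * sum(termination_currents_ka))
    return cold_load_w / COOLING_EFFICIENCY


def capex_eur(length_m: float, rated_current_ka: float,
              n_current_leads: int, n_tap_offs: int) -> float:
    """Upfront cost of the line, excluding the cooling station."""
    return (n_current_leads * CURRENT_LEAD_EUR
            + n_tap_offs * TAP_OFF_EUR
            + CRYOSTAT_EUR_PER_M * length_m
            + HTS_EUR_PER_KA_M * rated_current_ka * length_m)


if __name__ == "__main__":
    # Hypothetical line: 100 m, 10 kA, two 10 kA current leads, four 2.5 kA tap-offs.
    terminations_ka = [10.0, 10.0, 2.5, 2.5, 2.5, 2.5]
    print(f"Cooling power: {cooling_power_w(100.0, terminations_ka) / 1000.0:.1f} kW")
    print(f"CAPEX:         {capex_eur(100.0, 10.0, 2, 4):,.0f} EUR")
```

For the hypothetical line, the cold load of 1.7 kW translates into roughly 24 kW of electrical power at room temperature, and the CAPEX without the cooling station is 450,000 EUR. In this example the current leads dominate the cost, while the HTS conductor cost scales with both current and length.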

Compared to a conventional AC system, there is an increased CAPEX to consider. The cooling station, thermal insulation and superconductors result in a higher upfront cost than a conventional copper system. However, the OPEX is much lower, resulting in a payback time of under two years. The superconducting distribution also saves space, allowing for unprecedented power density inside the data center and reducing land cost.
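The payback reasoning can be expressed as a simple ratio of additional CAPEX to annual OPEX savings. The sketch below only illustrates that relationship. The function names are ours, and all example inputs, including the electricity price, are hypothetical placeholders rather than values from the study, so the result does not reproduce the payback figure quoted above.

```python
HOURS_PER_YEAR = 8760.0


def annual_energy_cost_eur(loss_kw: float, price_eur_per_kwh: float) -> float:
    """Yearly cost of a constant electrical loss under 24/7 operation."""
    return loss_kw * HOURS_PER_YEAR * price_eur_per_kwh


def simple_payback_years(extra_capex_eur: float, annual_saving_eur: float) -> float:
    """Years until the additional upfront cost is recovered (no discounting)."""
    if annual_saving_eur <= 0:
        raise ValueError("annual saving must be positive for a payback to exist")
    return extra_capex_eur / annual_saving_eur


if __name__ == "__main__":
    # Hypothetical placeholders, not figures from the article:
    extra_capex = 1_000_000.0   # EUR, superconducting CAPEX minus copper CAPEX
    avoided_loss_kw = 500.0     # kW of avoided conversion and ohmic losses
    price = 0.15                # EUR/kWh

    saving = annual_energy_cost_eur(avoided_loss_kw, price)
    print(f"Annual saving: {saving:,.0f} EUR")
    print(f"Payback time:  {simple_payback_years(extra_capex, saving):.2f} years")
```

Since the data center runs around the clock, every avoided kilowatt of loss counts for 8,760 hours per year, which is why lower distribution losses can recover a higher upfront cost comparatively quickly.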

The simplified DC infrastructure is another benefit, as transformation losses inside the data center are avoided. Multiple converters and power shelves are available that can step down the 380 VDC distribution voltage to supply the IT equipment. Furthermore, this voltage level is in line with the recently created standard for DC power cords [IEC TS 63236]. The DC grid can be expanded to include PV arrays, electrolyzers and fuel cells, which are inherently DC components. Wind power can also be included on a DC basis: wind turbine power trains have a DC rectification stage before converting to 50/60 Hz AC, and a connection at this stage would avoid further transformation losses. Sourcing renewable energy within this grid will be highly efficient, and operators can consider implementing an “energy as a service” system. The low operating costs would make it more profitable than conventional counterparts.

Conclusion

Due to recent developments, rack power inside the data center is increasing and pushing conventional technology to its limits. This is where superconductors can enable and improve data centers. This article described the EOS data center concept and presented a cost estimate comparing the system to a conventional data center. It showed that superconductors can eliminate the electric distribution losses inside the data center and enable high rack power without bringing medium voltage into the data center.

There are further benefits. Scalability is a major characteristic to consider: the higher the power, the more economical a superconducting data center becomes. Including renewables or expanding the superconducting grid to local prosumers will generate even more value. The high power density of the superconducting distribution and of liquid cooling will allow high power densities inside the white space. The Wooden Data Center design and connected renewables will create a net-zero data center. All these advantages show that superconductors offer a game-changing solution to the challenges of the data center industry.

References

[1] German Data Centers, "Data center impact report Deutschland," 2024. [Online]. Available: https://www.germandatacenters.com/….

[2] J. V. Minervini, "Superconducting Power Distribution for the Data Center," in 3rd Annual Green Technology Conference, New York, 2010.

[3] B. R. Shrestha, U. Tamrakar, T. M. Hansen, B. P. Bhattarai, S. James and R. Tonkoski, "Efficiency and Reliability Analyses of AC and 380V DC Distribution in Data Centers," IEEE Access, no. 4, 2016.

[4] A. Pratt, P. Kumar and T. V. Aldridge, "Evaluation of 400V DC distribution in telco and data centers to improve energy efficiency," Proc. 29th IEEE Int. Telecommun. Energy Conf., pp. 32-39, 2007.

[5] N. Rasmussen and J. Spitaels, "A quantitative comparison of high efficiency AC vs. DC power distribution for data centers," American Power Conversion (APC) White Paper, no. 127, 2007.

[6] J. Stark, "380 V DC Power: Shaping the Future of Data Center Energy Efficiency," Industry Perspectives, June 2015.

[7] D. Sterlace, "Direct current in the data center: are we there yet?" ABB, Jan. 2020.

[8] F. S. A. Grüter, "Advantages of a DC power supply in data center," 2012.

[9] M. Ton, B. Fortenbery and W. Tschudi, "DC Power for Improved Data Center Efficiency," Lawrence Berkeley National Laboratory, March 2008.

Vision Electric Super Conductors GmbH (VESC), Kaiserslautern, Germany, founded in 2013, develops superconducting busbar systems for the transmission of electricity at high power and low voltage levels.

Our vision is to use superconducting power transport systems to accelerate the electrification of the world to a higher and more efficient level. Superconductors contribute to a sustainable environment for us and future generations.

Our solutions complement conventional high-current busbar technology. VESC focuses on application projects with special requirements for busbar systems and cables in terms of energy efficiency, construction effort and personal protection. The company’s founder, Dr. Wolfgang Reiser, is one of the recognized experts in high-current supply systems with global project experience and was founder and co-owner of the technology companies for high-current applications, Vision Electric GmbH and EMS Elektro Metall Schwanenmühle GmbH.

Vision Electric Super Conductors GmbH

Morlauterer Str. 21

67657 Kaiserslautern

Phone: +49 631 627983-0

Fax: +49 631 627983-19

http://www.vesc-superbar.de/

Project Engineer

E-Mail: abrell@vesc-superbar.de
